In my last post, we went through the basic imports required to load and generate basic statistics about your structured (rectangular) data. In this post, we will be going further to generate a schema for your data and perform comparisons between your training and test data before further analysis. This post does not attempt to be a comprehensive manual for TFDV, it rather aims to familiarise the reader with basic data concepts and how to go about applying functions included in the TFDV package

The following cover all imports required for this tutorial

import tensorflow as tf

import tensorflow_data_validation as tfdv

import pandas as pd

from tensorflow_metadata.proto.v0 import schema_pb2

PermalinkSchema

A data schema is essentially a blueprint for your data, containing elements such as columnar data type, expected value range, the number of unique values for a given attribute, the domain for a given attribute, amongst others. Directly from the TFX documentation, a schema includes:

- The expected type of each feature.

- The expected presence of each feature, in terms of a minimum count and fraction of examples that must contain the feature.

- The expected valency of the feature in each example, i.e., minimum and maximum number of values.

- The expected domain of a feature, i.e., the small universe of values for a string feature, or range for an integer feature.

To generate a data schema, we first need to generate a DatasetFeatureStatisticsList object.

schema = tfdv.infer_schema(statistics=stats)

(To see the process of doing this, check the previous post)

We can then display the schema by calling display_schema() as shown below. The displayed table shows the output for the UCI Census Income dataset.

tfdv.display_schema()

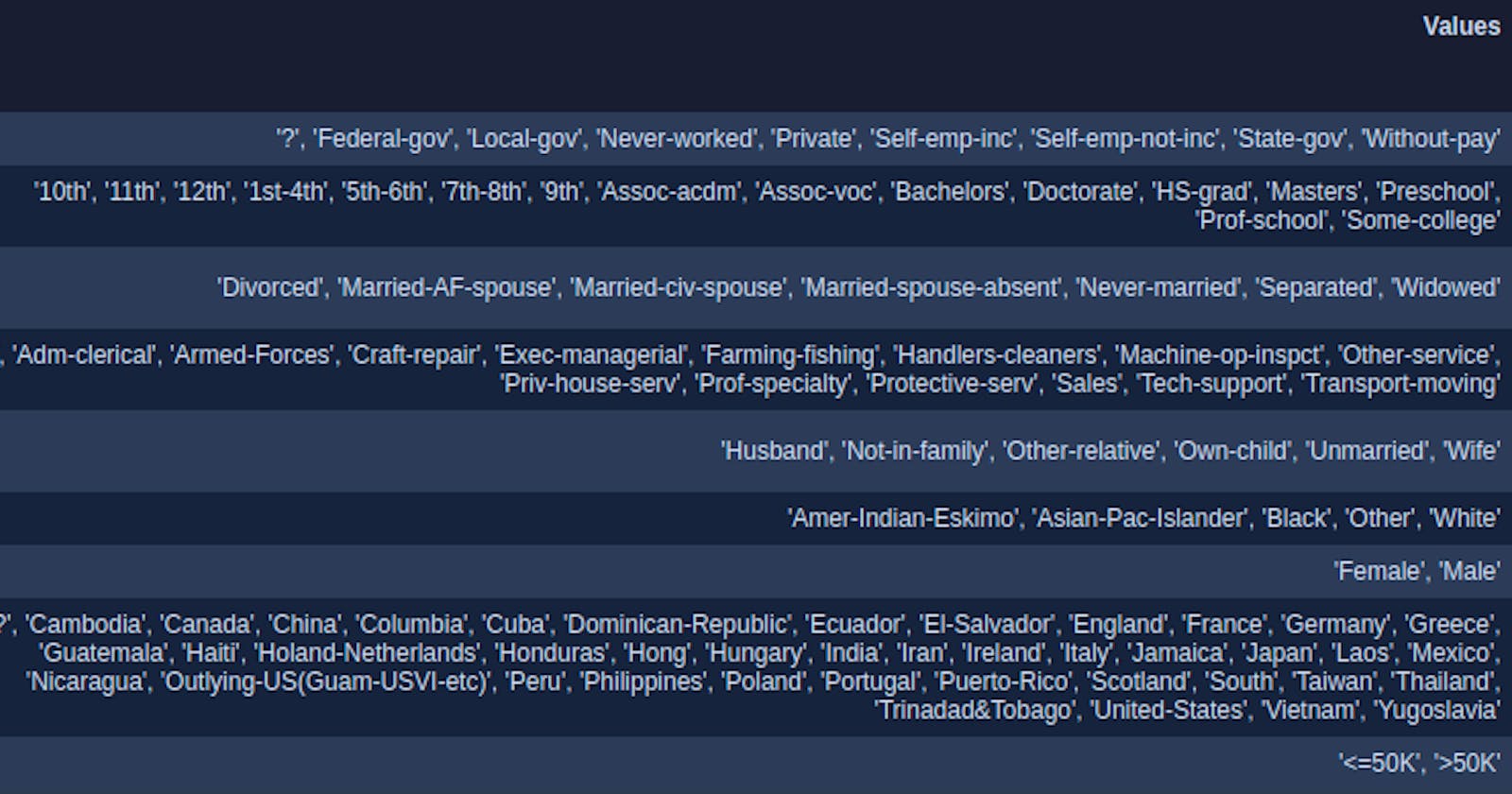

In addition to the data displayed above TFDV generates a list of legal values present for each attribute. This list of legal values describes the domain of the data, and good principles of data design necessitate consistent domain values for a given attribute.

This ensures that data tuples can be compared against each other in a logical manner, for example the attribute value "divorced" below only makes sense in the context of marital status, attempting to compare "divorced" to an out-of-domain value, such as "China" makes no logical sense, and a comparison between values of differing domains is in data terms, illegal.

It's not entirely relevant to know how TFDV stores your schema under-the-hood, however for the sake of completeness, the infer_schema() method returns a protocol buffer containing the result. This object can be easily updated and follows a data-structure format similar to a type JSON

PermalinkValidating New Data

The power of generating and storing your data schema is in cross-referencing your existing (expected) data schema against newly generated data. For example, let's say we generated Statistics for some new dataframe

new_stats = tfdv.generate_statistics_from_dataframe(new_df)

We can then use our existing schema object and call validate_statistics() passing in our new new stats object and our existing data schema as follows:

anomalies = tfdv.validate_statistics(statistics=new_stats, schema=schema)

If any anomalies exist in your new data, the above call displays plain text explaining the type and magnitude of data anomaly. A list of these data anomalies can be found here

We will not cover fixing every single anomaly, since this is on a per-anomaly basis and tends to vary wildly based on your specific use case. For a more detailed look at treating with data and schema anomalies, see Checking for Errors on a Per-Example Basis